The Evolution of Hardware in Chat GPT: A Closer Look

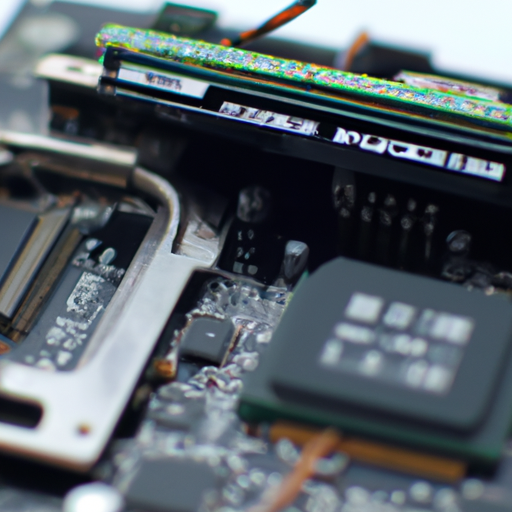

Have you ever wondered what goes on behind the scenes of a chatbot like Chat GPT? The software and algorithms are crucial, but the hardware that runs them matters just as much. In this article, we will take a closer look at how the hardware behind Chat GPT has evolved and how it has contributed to the advancements we see today.

In the early days of chatbots, the hardware used to run them was relatively simple. These systems were typically hosted on a single server with limited processing power and memory. As a result, early chatbots were often slow and struggled to handle complex conversations.

However, the hardware used in Chat GPT has come a long way. Today, these systems rely on powerful hardware configurations that let them process vast amounts of data and generate responses in near real time. This evolution has been driven by growing demand for more sophisticated chatbots that can understand and respond to natural, human-like conversation.

One of the key components of the modern hardware setup for Chat GPT is the graphics processing unit (GPU). GPUs are specialized processors that excel at performing many mathematical calculations in parallel. They are particularly well suited to natural language processing, which is dominated by large matrix multiplications. By harnessing GPUs, Chat GPT can process text far faster than a CPU-only system could, allowing for more interactive and engaging conversations.

Another important aspect of the hardware evolution in Chat GPT is the use of distributed systems. Instead of relying on a single server, modern chatbots are often deployed on clusters of servers that share the computational load. This distributed architecture allows for greater scalability and reliability, so that the chatbot can serve a large number of concurrent users without performance degrading.
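
To make the idea of spreading load across a cluster concrete, here is a minimal sketch of round-robin dispatch, one of the simplest ways to share requests among servers. The server names and request IDs are hypothetical, and nothing here is meant to describe how Chat GPT actually routes traffic; real deployments typically also weigh server health and current load.

```python
from itertools import cycle


def assign_round_robin(requests, servers):
    """Assign each request to the next server in turn (round-robin)."""
    assignment = {server: [] for server in servers}
    server_cycle = cycle(servers)
    for request in requests:
        assignment[next(server_cycle)].append(request)
    return assignment


# Hypothetical server names and request IDs, for illustration only.
servers = ["server-a", "server-b", "server-c"]
requests = [f"req-{i}" for i in range(7)]

assignment = assign_round_robin(requests, servers)
for server, handled in assignment.items():
    print(server, handled)
```

Because no single machine sees every request, adding servers to the list raises the number of users the cluster can serve at once.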

Furthermore, advances in memory and storage technology have also played a significant role in improving performance. Traditional hard disk drives (HDDs) have been largely replaced by solid-state drives (SSDs), which offer much faster read and write speeds. This mainly speeds up loading models and data from storage; once a model is running, response time depends chiefly on how quickly data moves through the system’s main memory and the memory attached to its accelerators.

In addition to GPUs, distributed systems, and SSDs, the hardware setup for Chat GPT often includes other components such as high-speed network connections and specialized hardware accelerators. These accelerators are designed to offload specific tasks from the main processor, further enhancing the system’s performance.

As we look to the future, the evolution of hardware in Chat GPT is set to continue. Researchers and engineers are constantly exploring new technologies and techniques to push the boundaries of what is possible. From more powerful GPUs to neural processing units (NPUs) designed specifically for AI workloads, the hardware landscape is evolving rapidly to meet the growing demands of chatbot technology.

In conclusion, the hardware behind Chat GPT has come a long way, enabling more sophisticated and intelligent conversations. The use of GPUs, distributed systems, SSDs, and other specialized components has transformed the performance and capabilities of chatbots. As technology continues to advance, we can expect even more exciting developments in the hardware that powers these intelligent systems, bringing us closer to truly human-like interactions.

Exploring the Role of Processors in Chat GPT’s Hardware Architecture

Inside the Silicon Brain: The Hardware Behind Chat GPT

When we interact with Chat GPT, the impressive language model developed by OpenAI, we are often left in awe of its ability to generate coherent and contextually relevant responses. But have you ever wondered about the hardware that powers this remarkable AI system? In this article, we will delve into the role of processors in Chat GPT’s hardware architecture, giving you a glimpse into the silicon brain that makes it all possible.

At the heart of Chat GPT’s hardware lies a powerful network of processors. These processors are responsible for executing the complex computations required to process and generate language. To handle the enormous amount of data and perform computations at lightning speed, Chat GPT relies on a distributed architecture, where multiple processors work in tandem.

One of the key components in Chat GPT’s hardware architecture is the central processing unit (CPU). The CPU acts as the brain of the system, coordinating and executing instructions. It performs tasks such as parsing input, executing algorithms, and managing memory. With its high clock speeds and multiple cores, the CPU ensures that Chat GPT can handle a large number of user queries simultaneously.

In addition to the CPU, Chat GPT also utilizes graphics processing units (GPUs) to accelerate its computations. GPUs are specialized processors designed to handle parallel tasks efficiently. They excel at performing repetitive calculations, making them ideal for the matrix operations involved in natural language processing. By harnessing the power of GPUs, Chat GPT can process requests and generate responses far faster than CPUs alone would allow.

To further enhance performance, some AI systems incorporate field-programmable gate arrays (FPGAs) into their hardware architecture. FPGAs are highly flexible chips that can be reprogrammed to perform specific tasks. Where they are used, FPGAs can take over certain computations from the CPU and GPU, freeing up resources and improving overall efficiency. OpenAI’s public descriptions of its infrastructure center on GPUs, so any role for FPGAs in Chat GPT specifically should be treated as unconfirmed.

Another component often found in AI hardware is the application-specific integrated circuit (ASIC). ASICs are custom-designed chips optimized for a specific application. For language models, that means chips built around the matrix arithmetic at the core of neural networks. Purpose-built chips of this kind can deliver greater performance and energy efficiency than general-purpose processors, although it is not publicly documented that Chat GPT runs on custom ASICs.

To ensure smooth communication between the various processors, Chat GPT relies on a high-speed interconnect network. This network enables fast data transfer and synchronization between different components, allowing for efficient collaboration and parallel processing. By minimizing latency and maximizing bandwidth, the interconnect network plays a crucial role in maintaining the responsiveness and real-time nature of Chat GPT.
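
The trade-off between latency and bandwidth can be illustrated with a simple first-order model, in which the time to move data is a fixed latency plus the data size divided by the bandwidth. The link figures below are hypothetical round numbers chosen for illustration, not measurements of any real interconnect.

```python
def transfer_time_seconds(num_bytes, latency_s, bandwidth_bytes_per_s):
    """First-order model: fixed latency plus size divided by bandwidth."""
    return latency_s + num_bytes / bandwidth_bytes_per_s


ONE_GB = 1e9  # payload size in bytes

# Hypothetical links, for illustration only.
fast_link = transfer_time_seconds(ONE_GB, latency_s=5e-6, bandwidth_bytes_per_s=100e9)
slow_link = transfer_time_seconds(ONE_GB, latency_s=50e-6, bandwidth_bytes_per_s=10e9)

print(f"fast link: {fast_link * 1e3:.3f} ms")
print(f"slow link: {slow_link * 1e3:.3f} ms")
```

For large payloads the bandwidth term dominates, while for many small messages (such as frequent synchronization between processors) the fixed latency matters more.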

In conclusion, the hardware behind Chat GPT is a marvel of engineering, combining CPUs, GPUs, and a high-speed interconnect network, with FPGAs and ASICs as further options for specialized acceleration. These components work together to process and generate language, enabling Chat GPT to provide intelligent and contextually relevant responses. As AI continues to advance, we can expect further innovations in hardware architecture, pushing the boundaries of what is possible in natural language processing. So the next time you engage in a conversation with Chat GPT, remember the intricate silicon brain that powers its remarkable abilities.

Memory Management in Chat GPT: Understanding the Hardware Requirements

Have you ever wondered how Chat GPT, the impressive language model developed by OpenAI, is able to generate such coherent and contextually relevant responses? The answer lies in its sophisticated hardware, specifically its memory management system. In this article, we will delve into the intricacies of memory management in Chat GPT and explore the hardware requirements that make it all possible.

Memory management is a critical aspect of running any language model, because the model’s parameters and the conversation being processed must be held in memory while a response is generated. In the case of Chat GPT, the memory system is designed to handle vast amounts of data, so that the model can take the full context of a conversation into account when generating a response.

To achieve this, Chat GPT relies on a combination of RAM (Random Access Memory) and storage devices. RAM plays a crucial role in the model’s ability to quickly access and manipulate data. It acts as a temporary workspace where data is stored and retrieved during processing. The larger the memory capacity, the larger the model and the longer the conversation context that can be held in working memory at once, which in turn supports more accurate and coherent responses.

However, RAM alone is not sufficient to meet the memory requirements of Chat GPT. The system also relies on storage devices, such as solid-state drives (SSDs) or hard disk drives (HDDs), to hold data persistently, including the model’s parameters when they are not loaded, and to supply data that exceeds the capacity of RAM. These storage devices provide a larger and more permanent, though slower, space for Chat GPT to draw on when needed.

The hardware requirements for memory management in Chat GPT are substantial. To ensure optimal performance, a model of this kind typically requires high-capacity memory, ranging from tens to hundreds of gigabytes. Additionally, the storage devices used should have sufficient capacity to accommodate the vast amount of data that Chat GPT needs to access.
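
A rough back-of-the-envelope calculation shows where figures in the tens to hundreds of gigabytes come from: the memory needed just to hold a model’s weights is the number of parameters multiplied by the bytes used per parameter. The parameter counts below are hypothetical examples, not Chat GPT’s actual size, and the estimate ignores the additional memory needed for activations and conversation context.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params, dtype):
    """Memory needed to hold the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Hypothetical model sizes, chosen only for illustration.
for num_params in (1e9, 10e9, 100e9):
    for dtype in ("fp32", "fp16"):
        gib = weight_memory_gib(num_params, dtype)
        print(f"{num_params:.0e} params in {dtype}: {gib:,.1f} GiB")
```

Under these assumptions, a model with tens of billions of parameters already needs tens of gigabytes for its weights alone, which is why large models are usually spread across several devices.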

Another crucial aspect of memory management in Chat GPT is the speed at which data can be accessed and retrieved. The model’s ability to generate responses in real-time relies on the efficiency of its memory system. Therefore, it is essential to use high-speed RAM and storage devices to minimize latency and ensure smooth operation.

Furthermore, the hardware used for memory management in Chat GPT must be scalable. As models grow in parameter count and handle longer conversations, their memory requirements grow as well. To accommodate this growth, the hardware must be capable of expanding its memory capacity without compromising performance. This scalability ensures that Chat GPT can continue to generate accurate and contextually relevant responses as its memory demands increase.

In conclusion, memory management is a crucial aspect of Chat GPT’s hardware architecture. The model’s ability to generate coherent and contextually relevant responses relies on its sophisticated memory system, which combines high-capacity RAM and storage devices. The hardware requirements for memory management in Chat GPT are substantial, necessitating large RAM capacities and high-speed storage devices. Additionally, the hardware must be scalable to accommodate the model’s growing memory demands. By understanding the intricacies of memory management in Chat GPT, we gain insight into the impressive hardware that powers this remarkable language model.

The Importance of GPUs in Powering Chat GPT’s Neural Networks

Have you ever wondered how chatbots like Chat GPT are able to generate such realistic and coherent responses? The secret lies in the powerful hardware that powers these neural networks. In particular, Graphics Processing Units (GPUs) play a crucial role in enabling Chat GPT to process and generate responses at lightning-fast speeds.

GPUs are specialized hardware components that are designed to handle complex mathematical computations. Traditionally, GPUs were primarily used for rendering graphics in video games and other visual applications. However, their parallel processing capabilities have made them indispensable in the field of artificial intelligence.

Neural networks, the backbone of Chat GPT, consist of interconnected nodes or “neurons” that process and transmit information. These networks are trained on vast amounts of data to learn patterns and make predictions. However, training and running these networks can be computationally intensive, requiring significant processing power.

This is where GPUs come in. Unlike Central Processing Units (CPUs), which are general-purpose processors with a relatively small number of cores, GPUs are specifically optimized for parallel processing. They can perform thousands of calculations simultaneously, making them ideal for the massive amounts of arithmetic that neural networks require.

The parallel architecture of GPUs allows them to break down complex computations into smaller, more manageable tasks that can be executed simultaneously. This greatly speeds up the training and inference processes of neural networks. In the case of Chat GPT, GPUs enable the model to process and generate responses in real-time, providing users with a seamless and interactive chat experience.
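
The decomposition described above can be sketched with a matrix-vector product, where every output element depends on only one row of the matrix and can therefore be computed independently. This is a toy illustration that uses CPU threads as stand-ins for GPU lanes: it shows how the work splits into independent tasks, not how fast a GPU runs them, and Python threads will not actually speed up this arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Dot product of one matrix row with the vector."""
    return sum(a * b for a, b in zip(row, vector))


def matvec_sequential(matrix, vector):
    """Compute each output element one after another."""
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix, vector, workers=4):
    """Compute each output element as an independent task.

    No task needs another task's result, which is the property a GPU
    exploits when it runs thousands of such tasks at once.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))


matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
vector = [1, 0, -1]

# Both strategies produce the same result; only the scheduling differs.
print(matvec_sequential(matrix, vector))
print(matvec_parallel(matrix, vector))
```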

The importance of GPUs in powering Chat GPT’s neural networks cannot be overstated. Without the computational power provided by GPUs, training and running these networks would be prohibitively slow and inefficient. Users would have to wait much longer for responses, and the overall user experience would suffer.

Furthermore, GPUs also play a crucial role in enabling the scalability of Chat GPT. As the demand for chatbot services grows, the hardware infrastructure must be able to handle increasing workloads. GPUs, with their parallel processing capabilities, allow for efficient scaling of neural network computations, ensuring that Chat GPT can handle a large number of concurrent users without sacrificing performance.

In recent years, there have been significant advancements in GPU technology, with companies like NVIDIA leading the way. These advancements have resulted in GPUs that are more powerful and energy-efficient than ever before. This means that chatbot developers can leverage these advancements to create even more sophisticated and responsive AI models.

In conclusion, GPUs are an essential component in powering the neural networks that drive chatbots like Chat GPT. Their parallel processing capabilities enable these networks to process and generate responses at lightning-fast speeds, providing users with a seamless and interactive chat experience. As the demand for chatbot services continues to grow, the importance of GPUs in enabling scalability cannot be overstated. With ongoing advancements in GPU technology, the future looks bright for chatbots and the AI-powered conversations they facilitate.

Scalability and Efficiency: Hardware Considerations for Chat GPT’s Performance

Have you ever wondered how Chat GPT, the revolutionary language model developed by OpenAI, is able to generate such coherent and contextually relevant responses? The answer lies in the powerful hardware that powers this cutting-edge technology. In this article, we will delve into the world of silicon brains and explore the hardware considerations that contribute to Chat GPT’s impressive performance.

Scalability is a crucial factor when it comes to language models like Chat GPT. The ability to handle large amounts of data and process it efficiently is what sets this technology apart. To achieve this, OpenAI relies on a distributed computing infrastructure that consists of thousands of powerful GPUs (Graphics Processing Units). These GPUs work in tandem to handle the massive computational load required for training and inference tasks.

Efficiency is another key aspect that plays a vital role in Chat GPT’s performance. The hardware infrastructure is designed to optimize power consumption and minimize latency. Specialized AI accelerators show how this can be done: Google’s Tensor Processing Units (TPUs), for example, are chips built specifically for neural network arithmetic. OpenAI’s publicly described infrastructure, however, is built on NVIDIA GPUs hosted on Microsoft Azure rather than on TPUs, and those GPUs include dedicated matrix units (Tensor Cores) that serve a similar purpose. This helps users enjoy a conversational experience with minimal delay.

The hardware behind Chat GPT is not limited to GPUs. OpenAI also leverages high-speed networking infrastructure to enable efficient communication between different components of the system. This allows for fast data transfer and synchronization, ensuring that the distributed computing resources work together harmoniously. By minimizing communication overhead, OpenAI maximizes the overall efficiency of the system.

To further enhance scalability and efficiency, OpenAI employs advanced techniques like model parallelism and data parallelism. Model parallelism involves splitting the model across multiple GPUs, allowing each GPU to focus on a specific portion of the model. This enables efficient utilization of computational resources and facilitates the training of larger models. On the other hand, data parallelism involves splitting the training data across multiple GPUs, allowing for parallel processing of different data samples. This technique significantly speeds up the training process and improves overall efficiency.
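
The two techniques can be sketched on toy problems. This is an illustrative sketch in plain Python, not OpenAI’s training code: the data-parallel part uses a hypothetical one-parameter model y = w * x, and the model-parallel part shows only one way of splitting a model (dividing a single layer’s weight columns among devices). "Workers" and "devices" are simulated with ordinary loops.

```python
def mse_gradient(w, xs, ys):
    """Gradient of mean squared error for the toy 1-D model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_gradient(w, xs, ys, num_workers):
    """Data parallelism: every worker holds the whole model (here just w)
    but sees only its own equal-sized shard of the batch. Averaging the
    per-shard gradients reproduces the full-batch gradient."""
    shard = len(xs) // num_workers  # assumes the batch divides evenly
    grads = [
        mse_gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    return sum(grads) / num_workers


def layer_forward(x, weight_columns):
    """Compute x @ W for a layer whose weights are stored column by column."""
    return [sum(a * b for a, b in zip(x, col)) for col in weight_columns]


def model_parallel_forward(x, weight_columns, num_devices):
    """Model parallelism: each device holds only a slice of the layer's
    columns and produces the matching slice of the output."""
    per_device = len(weight_columns) // num_devices  # assumes an even split
    output = []
    for d in range(num_devices):
        device_slice = weight_columns[d * per_device:(d + 1) * per_device]
        output.extend(layer_forward(x, device_slice))
    return output


# Toy data, chosen only for illustration.
xs, ys, w = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], 0.5
print(mse_gradient(w, xs, ys), data_parallel_gradient(w, xs, ys, num_workers=2))

x = [1.0, 2.0]
columns = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
print(layer_forward(x, columns), model_parallel_forward(x, columns, num_devices=2))
```

In both cases the split computation gives the same answer as the unsplit one. What changes is how much data or how much of the model each device has to hold, at the cost of extra communication to combine the results.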

In addition to the hardware considerations, OpenAI also places great emphasis on software optimization. The software stack that powers Chat GPT is designed to take full advantage of the underlying hardware. This includes optimizations such as mixed-precision training, which reduces memory requirements and speeds up computations. OpenAI also invests in continuous research and development to improve the efficiency and performance of its models, ensuring that Chat GPT remains at the forefront of natural language processing technology.
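
The memory saving behind mixed precision comes from storing many values in 16 bits instead of 32. The sketch below uses Python’s standard `struct` module to show the size difference and the rounding it introduces; it illustrates the number formats only and is not a training loop. In practice, mixed-precision schemes typically keep some values in full precision to limit the effect of this rounding.

```python
import struct


def to_half_and_back(value):
    """Round-trip a Python float through IEEE 754 half precision (16 bits)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


fp32_bytes = struct.calcsize("<f")  # single precision
fp16_bytes = struct.calcsize("<e")  # half precision

print("bytes per fp32 value:", fp32_bytes)
print("bytes per fp16 value:", fp16_bytes)

# Half precision cannot represent 0.1 exactly, so a small error appears.
original = 0.1
rounded = to_half_and_back(original)
print("0.1 stored as fp16:", rounded)
print("rounding error:", abs(rounded - original))
```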

In conclusion, the hardware behind Chat GPT plays a crucial role in its scalability and efficiency. OpenAI’s distributed computing infrastructure, powered by thousands of GPUs with specialized matrix acceleration, enables the model to handle large amounts of data and process it with remarkable speed and energy efficiency. Advanced techniques like model parallelism and data parallelism further enhance scalability and optimize resource utilization. Combined with software optimizations and continuous research, the hardware behind Chat GPT helps users enjoy a smooth and engaging conversational experience. So, the next time you interact with Chat GPT, remember that behind its impressive capabilities lies a powerful silicon brain.